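Sorry that I keep writing about "bistable figures", but perception is our company's core concept, so please bear with me.

This time I have Python code I can share, so here is part 3.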

・Part 1: the basics

・Part 2: an introduction to research on classification using an EEG device and neural networks

・Part 3 (this post): an experiment

Let's get started.

Environment

Windows

Anaconda

Experiment: Can AI classify the bistable figure that looks like both a rabbit and a duck?

First, download.py downloads the training images using the Flickr API.

You will need to obtain your own API key and secret.
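Rather than pasting the key and secret into the script, one option is to read them from environment variables so they never end up in shared code. A minimal sketch, assuming you export two variables before running the script; the variable names FLICKR_API_KEY / FLICKR_API_SECRET and the helper load_flickr_credentials are hypothetical, not part of flickrapi:

```python
import os


def load_flickr_credentials(key_var="FLICKR_API_KEY", secret_var="FLICKR_API_SECRET"):
    """Read the Flickr API key and secret from environment variables.

    The variable names are hypothetical defaults; pick whatever you export.
    """
    key = os.environ.get(key_var)
    secret = os.environ.get(secret_var)
    if not key or not secret:
        raise RuntimeError(f"Set {key_var} and {secret_var} before running download.py")
    return key, secret


# Example (in download.py):
#   key, secret = load_flickr_credentials()
#   flickr = FlickrAPI(key, secret, format='parsed-json')
```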

download.py

```python
import os
import sys
import time
from urllib.request import urlretrieve

from flickrapi import FlickrAPI

# Use your own Flickr API key and secret
key = "<FLICKR_API_KEY>"
secret = "<FLICKR_API_SECRET>"
wait_time = 1  # seconds to wait between downloads

# Class to download: 'duck' or 'rabbit' (run once per class, default 'rabbit')
animalname = sys.argv[1] if len(sys.argv) > 1 else 'rabbit'
savedir = os.path.join(".", animalname)
os.makedirs(savedir, exist_ok=True)  # create ./duck or ./rabbit if missing

flickr = FlickrAPI(key, secret, format='parsed-json')
result = flickr.photos.search(
    text=animalname,
    per_page=400,
    media='photos',
    sort='relevance',
    safe_search=1,
    extras='url_q, license',  # url_q = 150x150 square thumbnail URL
)
photos = result['photos']

for photo in photos['photo']:
    url_q = photo.get('url_q')
    if url_q is None:  # skip entries without a thumbnail URL
        continue
    filepath = os.path.join(savedir, photo['id'] + '.jpg')
    if os.path.exists(filepath):  # already downloaded
        continue
    urlretrieve(url_q, filepath)
    time.sleep(wait_time)
```
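Separating "which files still need downloading" from the download itself makes the skip logic easy to check without calling the API. A minimal sketch, assuming the parsed-json response structure used in download.py (result['photos']['photo'], each entry with an 'id' and, when requested via extras, a 'url_q'); plan_downloads and the sample response are hypothetical:

```python
import os
import tempfile


def plan_downloads(result, savedir):
    """List (url, filepath) pairs still to fetch from a parsed-json photos.search result.

    Entries without 'url_q', or whose target file already exists, are skipped.
    """
    plan = []
    for photo in result["photos"]["photo"]:
        url = photo.get("url_q")
        if not url:
            continue
        filepath = os.path.join(savedir, photo["id"] + ".jpg")
        if os.path.exists(filepath):
            continue
        plan.append((url, filepath))
    return plan


# Made-up response with the same nesting as in download.py
fake_result = {"photos": {"photo": [
    {"id": "101", "url_q": "https://example.com/101_q.jpg"},
    {"id": "102"},  # no url_q, so it is skipped
]}}
savedir = tempfile.mkdtemp()  # empty directory, so nothing counts as downloaded yet
plan = plan_downloads(fake_result, savedir)
print(plan)
```

The download loop then only needs to call urlretrieve and sleep for each pair in the plan.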

Next, build the dataset.

gen_data.py

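```python
import glob
import os

import numpy as np
from PIL import Image
from sklearn import model_selection
from sklearn.utils import shuffle

classes = ["duck", "rabbit"]
image_size = 50      # images are resized to 50x50
max_per_class = 200  # use at most 200 images per class

X = []
y = []

for index, classlabel in enumerate(classes):
    photos_dir = os.path.join(".", classlabel)
    files = glob.glob(os.path.join(photos_dir, "*.jpg"))
    for i, file in enumerate(files):
        if i >= max_per_class:
            break
        image = Image.open(file)
        image = image.convert("RGB")
        image = image.resize((image_size, image_size))
        X.append(np.asarray(image))
        y.append(index)  # 0 = duck, 1 = rabbit

X = np.array(X, dtype=np.float32)
y = np.array(y, dtype=np.int32)
X, y = shuffle(X, y, random_state=42)

# Hold out 20% of the images as test data
X_train, X_test, y_train, y_test = model_selection.train_test_split(
    X, y, test_size=0.2, random_state=42)

# Scale pixel values to [0, 1]
X_train = X_train / 255.0
X_test = X_test / 255.0

# The four arrays have different shapes, so store them in a 1-D object array
# (np.save on a plain tuple of them can fail on recent NumPy versions)
xy = np.empty(4, dtype=object)
for i, arr in enumerate((X_train, X_test, y_train, y_test)):
    xy[i] = arr
np.save("./animal_aug.npy", xy, allow_pickle=True)
```

With the dataset saved, the next step is training.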
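Because the four arrays differ in shape, packing them into one .npy file goes through a NumPy object array, and loading it back needs allow_pickle=True. A quick sanity check of that round trip before training, as a sketch with small synthetic arrays; the helper names save_splits / load_splits and the array sizes are made up for illustration:

```python
import os
import tempfile

import numpy as np


def save_splits(path, X_train, X_test, y_train, y_test):
    """Store four differently shaped arrays in one .npy file as a 1-D object array."""
    xy = np.empty(4, dtype=object)
    for i, arr in enumerate((X_train, X_test, y_train, y_test)):
        xy[i] = arr
    np.save(path, xy, allow_pickle=True)


def load_splits(path):
    """Load the four arrays back; allow_pickle=True is required for object arrays."""
    X_train, X_test, y_train, y_test = np.load(path, allow_pickle=True)
    return X_train, X_test, y_train, y_test


# Tiny synthetic stand-ins (8 train / 2 test images of 50x50x3), not real data
rng = np.random.default_rng(0)
X_train = rng.random((8, 50, 50, 3), dtype=np.float32)
X_test = rng.random((2, 50, 50, 3), dtype=np.float32)
y_train = rng.integers(0, 2, size=8).astype(np.int32)
y_test = rng.integers(0, 2, size=2).astype(np.int32)

path = os.path.join(tempfile.mkdtemp(), "splits.npy")
save_splits(path, X_train, X_test, y_train, y_test)
loaded = load_splits(path)
for name, a in zip(("X_train", "X_test", "y_train", "y_test"), loaded):
    print(name, a.shape, a.dtype)
```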

cnn.py

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D
from tensorflow.keras.layers import Activation, Dropout, Flatten, Dense

num_classes = 2


def main():
    # Load the splits saved by gen_data.py
    X_train, X_test, y_train, y_test = np.load("./animal_aug.npy", allow_pickle=True)
    # One-hot encode the labels (0 = duck, 1 = rabbit)
    y_train = tf.keras.utils.to_categorical(y_train, num_classes)
    y_test = tf.keras.utils.to_categorical(y_test, num_classes)

    model = model_train(X_train, y_train)
    model_eval(model, X_test, y_test)


def model_train(X, y):
    model = Sequential()
    model.add(Conv2D(32, (3, 3), padding='same', input_shape=X.shape[1:]))
    model.add(Activation('relu'))
    model.add(Conv2D(32, (3, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Dropout(0.25))

    model.add(Conv2D(64, (3, 3), padding='same'))
    model.add(Activation('relu'))
    model.add(Conv2D(64, (3, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Dropout(0.25))

    model.add(Flatten())
    model.add(Dense(512))
    model.add(Activation('relu'))
    model.add(Dropout(0.5))
    model.add(Dense(num_classes))
    model.add(Activation('softmax'))

    opt = tf.keras.optimizers.RMSprop(learning_rate=0.0001, rho=0.9)
    model.compile(loss='categorical_crossentropy', optimizer=opt, metrics=['accuracy'])
    model.fit(X, y, batch_size=32, epochs=100)

    # Save architecture and weights for predict.py
    model.save('./animal_cnn_aug.h5')
    return model


def model_eval(model, X, y):
    scores = model.evaluate(X, y, verbose=1)
    print('Test Loss:', scores[0])
    print('Test Accuracy:', scores[1])


if __name__ == "__main__":
    main()
```
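To see how large a feature map reaches the Dense(512) layer, the spatial size can be traced by hand: a 3x3 convolution with stride 1 keeps the size with padding='same' and shrinks it by 2 with the default 'valid' padding, and 2x2 max pooling halves it (rounding down). A small sketch of that arithmetic for a 50x50 input; the LAYERS list simply mirrors cnn.py and no TensorFlow is involved:

```python
# (label, kind, kernel/pool size, output channels), mirroring cnn.py
LAYERS = [
    ("Conv2D(32, same)", "conv_same", 3, 32),
    ("Conv2D(32)", "conv_valid", 3, 32),
    ("MaxPooling2D(2)", "pool", 2, 32),
    ("Conv2D(64, same)", "conv_same", 3, 64),
    ("Conv2D(64)", "conv_valid", 3, 64),
    ("MaxPooling2D(2)", "pool", 2, 64),
]


def trace_shapes(size=50):
    """Return [(label, height/width, channels)] per layer and the Flatten length."""
    rows = []
    channels = 3
    for label, kind, k, ch in LAYERS:
        if kind == "conv_valid":
            size = size - k + 1  # stride 1, 'valid' padding (Keras default)
        elif kind == "pool":
            size = size // k     # pool size k, stride k
        # 'conv_same': stride 1 with 'same' padding keeps the size
        channels = ch
        rows.append((label, size, channels))
    return rows, size * size * channels


rows, flat = trace_shapes(50)
for label, s, c in rows:
    print(f"{label:18s} -> {s}x{s}x{c}")
print("Flatten ->", flat)
```

Calling model.summary() on the Keras model should report the same shapes.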

Now run a prediction.

predict.py

```python
import sys

import numpy as np
import tensorflow as tf
from PIL import Image

classes = ["duck", "rabbit"]
image_size = 50


def build_model():
    # The .h5 file saved by cnn.py contains both the architecture and the
    # weights, so the layers do not need to be rebuilt here
    return tf.keras.models.load_model('./animal_cnn_aug.h5')


def main():
    image = Image.open(sys.argv[1])
    image = image.convert('RGB')
    image = image.resize((image_size, image_size))
    # Scale to [0, 1] as in gen_data.py, and add a batch axis: (1, 50, 50, 3)
    X = np.asarray(image, dtype=np.float32) / 255.0
    X = X[np.newaxis, ...]

    model = build_model()
    result = model.predict(X)[0]
    predicted = result.argmax()
    percentage = int(result[predicted] * 100)
    print("{0} ({1} %)".format(classes[predicted], percentage))


if __name__ == '__main__':
    main()
```
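The key point in predict.py is that a new image has to go through the same preprocessing as the training data: RGB, 50x50, pixel values divided by 255, plus a batch axis. A minimal NumPy-only sketch of that preprocessing and of decoding the softmax output, assuming the image has already been resized (for example with PIL as above); the function names to_model_input and decode_prediction are hypothetical:

```python
import numpy as np

classes = ["duck", "rabbit"]  # same order as in gen_data.py


def to_model_input(rgb):
    """Turn one resized HxWx3 uint8 RGB image into a (1, H, W, 3) float32 batch in [0, 1]."""
    x = np.asarray(rgb, dtype=np.float32) / 255.0
    return x[np.newaxis, ...]


def decode_prediction(probs):
    """Return (class name, integer percentage) for one softmax vector, as predict.py prints it."""
    predicted = int(np.argmax(probs))
    return classes[predicted], int(probs[predicted] * 100)


# Usage with a synthetic all-white 50x50 image and a made-up softmax output
batch = to_model_input(np.full((50, 50, 3), 255, dtype=np.uint8))
print(batch.shape, batch.min(), batch.max())
print(decode_prediction(np.array([0.25, 0.75])))
```

Skipping the division by 255 would feed the model values about 255 times larger than anything it saw during training.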

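Let's look at the results.

The steps are as follows. download.py fetches one class (animalname) per run, so run it for both duck and rabbit before gen_data.py.

```
$ python download.py
$ python gen_data.py
$ python cnn.py
$ python predict.py test.jpg
```

I prepared the following images to classify as rabbit or duck: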

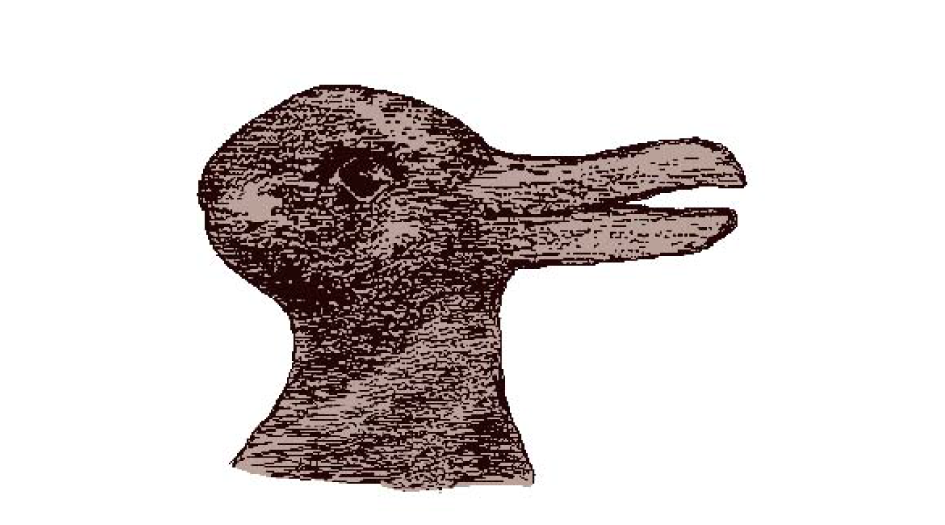

test1.png

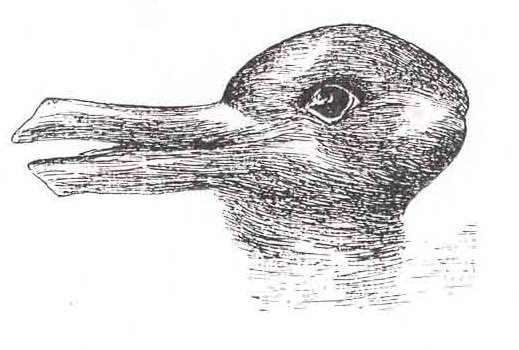

test2.jpg

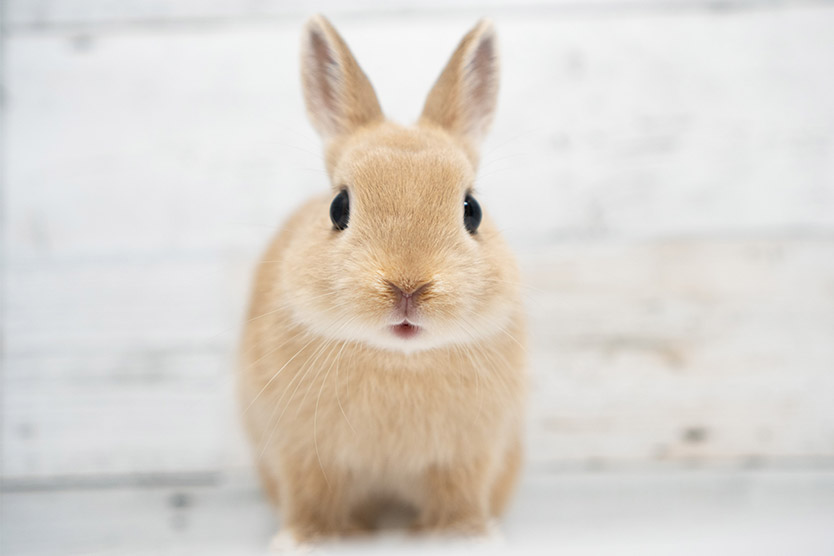

test3.jpg

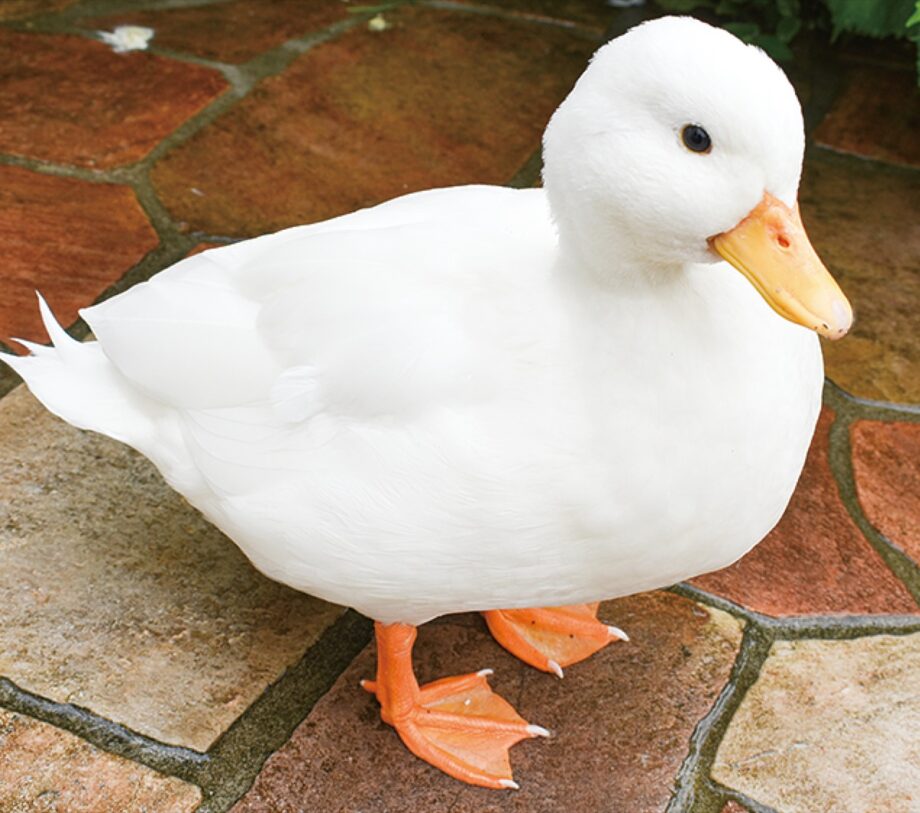

test4.jpg

test5.jpg

test6.jpg

The results were as follows:

| Image | Prediction |
| --- | --- |
| test1.png | duck |
| test2.jpg | duck |
| test3.jpg | duck |
| test4.jpg | rabbit |
| test5.jpg | rabbit |
| test6.jpg | duck |

Conclusion

Apparently, to this AI, the bistable figure looks only like a duck.