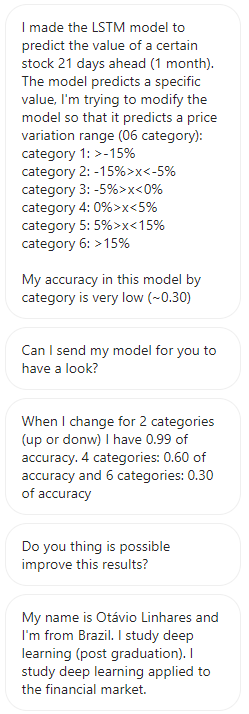

DM 来了,说:“你能提高LSTM模型的准确性吗?

我们将回答如何提高实际准确性。

简而言之

雅虎金融公司预测了巴西博尔萨巴尔考公司的股票。

每次增加变化率的条件分支时,精度都会下降,因此请考虑模型。

这是一个要求。

现在,让我们来看看如何真正提高准确性。

当预测精度不高时,您可以采取哪些方法?

我以前在文章里做过

提高精度也是基本知识。

通常,当预测精度差时,尝试以下操作:

过度拟合时

收集更多培训数据

减少特征量 n

增加正则化系数 λ

安装不足时

增加特征量 n

添加高级功能

减少正则化系数 λ

环境

WSL2

・Jupyter Notebook

* 发送它的人似乎没有问题,在科拉布,因为它是科拉布环境

发送的源代码

我希望提高预测的准确性。

实际的源代码已经发送。

让我们先破译源代码。

信息是开放的,我认为没有问题,即使我透露它,因为它是学习。

从这里开始

准备数据

安装软件包

!pip install tensorflow-gpu

!pip install yfinance --upgrade --no-cache-dir

!pip install pandas_datareader导入

import pandas_datareader.data as web

from datetime import date, timedelta

import pandas as pd

from numpy import array

from keras.models import Sequential

from keras.layers import LSTM

from keras.layers import Dense

import matplotlib.pyplot as plt

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras.layers import Dropout

import yfinance as yf

yf.pdr_override()收购巴西博尔萨巴尔考2000年至2022年的股票价格

b3sa3 = web.get_data_yahoo("B3SA3.SA",start="2000-01-01", end="2022-12-31",interval="1d")设置测试数据和边界

hj=date.today()

td0 = timedelta(1)

td1 = timedelta(800)

td2 = timedelta(21)

end = hj-td2

start = end-td1

yesterday=hj-td0

print(start, end, hj)

b3sa3_test = web.get_data_yahoo("B3SA3.SA",start=start, end=end,interval="1d")

b3sa3_hj = web.get_data_yahoo("B3SA3.SA",start=yesterday, end=hj,interval="1d")

b3sa3_test = b3sa3_test.tail(365)测试数据

b3sa3_test分类中的决策边界

b3sa3_hj前五行数据检查

b3sa3.head()最后五行数据检查

b3sa3.tail()空检查

b3sa3.isnull().sum()数据信息确认

b3sa3.info()对于两类分类

模型

# split a univariate sequence into samples

def split_sequence(sequence, n_steps, avanco):

X, y = list(), list()

for i in range(len(sequence)):

# find the end of this pattern

end_ix = i + n_steps

z=end_ix-1

# check if we are beyond the sequence

if end_ix+avanco > len(sequence)-1:

break

# gather input and output parts of the pattern

seq_x, seq_y = sequence[i:end_ix], sequence[end_ix+avanco]

e=seq_y

f=seq_x[-1]

faixa = ((e/f)-1)*100

if faixa <0:

faixa=-1

else:

faixa=1

X.append(seq_x)

y.append(faixa)

return array(X), array(y)

# define input sequence

raw_seq = b3sa3['Close']

# choose a number of time steps

n_steps = 365

avanco = 21

# split into samples

X, y = split_sequence(raw_seq, n_steps,avanco)

# reshape from [samples, timesteps] into [samples, timesteps, features]

n_features = 1

X2 = X.reshape((X.shape[0], X.shape[1], n_features))转换为两类分类二进制数组

num_classes = 2

y2 = keras.utils.to_categorical(y, num_classes)适合

# define model

model2 = Sequential()

model2.add(LSTM(100, activation='tanh'))

model2.add(Dense(2,activation='sigmoid'))

model2.compile(optimizer='adam', loss="categorical_crossentropy", metrics=['accuracy'])

checkpoint_filepath = '/checkpoint'

# fit model

model2.fit(X2, y2, validation_split=0.2, epochs=10)结果

Epoch 1/10 85/85 [==============================] - 11s 119ms/step - loss: 0.0246 - accuracy: 0.9926 - val_loss: 3.0749e-04 - val_accuracy: 1.0000 Epoch 2/10 85/85 [==============================] - 10s 118ms/step - loss: 1.7007e-04 - accuracy: 1.0000 - val_loss: 1.6888e-04 - val_accuracy: 1.0000 Epoch 3/10 85/85 [==============================] - 10s 120ms/step - loss: 9.2416e-05 - accuracy: 1.0000 - val_loss: 1.0388e-04 - val_accuracy: 1.0000 Epoch 4/10 85/85 [==============================] - 10s 121ms/step - loss: 5.6772e-05 - accuracy: 1.0000 - val_loss: 7.2730e-05 - val_accuracy: 1.0000 Epoch 5/10 85/85 [==============================] - 10s 121ms/step - loss: 3.9265e-05 - accuracy: 1.0000 - val_loss: 5.5672e-05 - val_accuracy: 1.0000 Epoch 6/10 85/85 [==============================] - 10s 122ms/step - loss: 2.9215e-05 - accuracy: 1.0000 - val_loss: 4.4618e-05 - val_accuracy: 1.0000 Epoch 7/10 85/85 [==============================] - 10s 115ms/step - loss: 2.2800e-05 - accuracy: 1.0000 - val_loss: 3.6798e-05 - val_accuracy: 1.0000 Epoch 8/10 85/85 [==============================] - 10s 116ms/step - loss: 1.8407e-05 - accuracy: 1.0000 - val_loss: 3.0964e-05 - val_accuracy: 1.0000 Epoch 9/10 85/85 [==============================] - 10s 118ms/step - loss: 1.5248e-05 - accuracy: 1.0000 - val_loss: 2.6502e-05 - val_accuracy: 1.0000 Epoch 10/10 85/85 [==============================] - 10s 118ms/step - loss: 1.2893e-05 - accuracy: 1.0000 - val_loss: 2.3007e-05 - val_accuracy: 1.0000

对于四类分类

模型

# split a univariate sequence into samples

def split_sequence(sequence, n_steps, avanco):

X, y =list(), list()

for i in range(len(sequence)):

# find the end of this pattern

end_ix = i + n_steps

z=end_ix-1

# check if we are beyond the sequence

if end_ix+avanco > len(sequence)-1:

break

# gather input and output parts of the pattern

seq_x, seq_y = sequence[i:end_ix], sequence[end_ix+avanco]

e=seq_y

f=seq_x[-1]

faixa = ((e/f)-1)*100

if faixa <-5:

faixa=-2

elif faixa <0:

faixa=-1

elif faixa <5:

faixa=1

elif faixa >5:

faixa=2

X.append(seq_x)

y.append(faixa)

return array(X), array(y)

# define input sequence

raw_seq = b3sa3['Close']

# choose a number of time steps

n_steps = 365

avanco = 21

# split into samples

X, y = split_sequence(raw_seq, n_steps,avanco)

# reshape from [samples, timesteps] into [samples, timesteps, features]

n_features = 1

X4 = X.reshape((X.shape[0], X.shape[1], n_features))转换为四类分类二进制数组

num_classes = 4

y4 = keras.utils.to_categorical(y, num_classes)适合

# define model

model4 = Sequential()

model4.add(LSTM(100, activation='tanh'))

model4.add(Dense(4,activation='sigmoid'))

model4.compile(optimizer='adam', loss="categorical_crossentropy", metrics=['accuracy'])

# fit model

model4.fit(X4, y4, validation_split=0.2, epochs=10)结果

Epoch 1/10

85/85 [==============================] - 11s 121ms/step - loss: 0.9769 - accuracy: 0.5952 - val_loss: 0.9037 - val_accuracy: 0.6356

Epoch 2/10

85/85 [==============================] - 10s 118ms/step - loss: 0.9510 - accuracy: 0.6063 - val_loss: 0.9116 - val_accuracy: 0.6356

Epoch 3/10

85/85 [==============================] - 10s 116ms/step - loss: 0.9541 - accuracy: 0.6063 - val_loss: 0.9150 - val_accuracy: 0.6356

Epoch 4/10

85/85 [==============================] - 10s 117ms/step - loss: 0.9476 - accuracy: 0.6063 - val_loss: 0.9061 - val_accuracy: 0.6356

Epoch 5/10

85/85 [==============================] - 10s 115ms/step - loss: 0.9522 - accuracy: 0.6063 - val_loss: 0.9117 - val_accuracy: 0.6356

Epoch 6/10

85/85 [==============================] - 10s 114ms/step - loss: 0.9493 - accuracy: 0.6063 - val_loss: 0.9010 - val_accuracy: 0.6356

Epoch 7/10

85/85 [==============================] - 10s 114ms/step - loss: 0.9497 - accuracy: 0.6063 - val_loss: 0.9049 - val_accuracy: 0.6356

Epoch 8/10

85/85 [==============================] - 10s 118ms/step - loss: 0.9449 - accuracy: 0.6063 - val_loss: 0.9087 - val_accuracy: 0.6356

Epoch 9/10

85/85 [==============================] - 10s 119ms/step - loss: 0.9478 - accuracy: 0.6063 - val_loss: 0.9058 - val_accuracy: 0.6356

Epoch 10/10

85/85 [==============================] - 10s 119ms/step - loss: 0.9452 - accuracy: 0.6063 - val_loss: 0.9055 - val_accuracy: 0.6356

对于六类分类

模型

def split_sequence(sequence, n_steps, avanco):

X, y = list(), list()

for i in range(len(sequence)):

# find the end of this pattern

end_ix = i + n_steps

z=end_ix-1

# check if we are beyond the sequence

if end_ix+avanco > len(sequence)-1:

break

# gather input and output parts of the pattern

seq_x, seq_y = sequence[i:end_ix], sequence[end_ix+avanco]

e=seq_y

f=seq_x[-1]

faixa = ((e/f)-1)*100

if faixa <-15:

faixa=-3

elif faixa <-5:

faixa=-2

elif faixa <0:

faixa=-1

elif faixa <5:

faixa=1

elif faixa <15:

faixa=2

elif faixa >15:

faixa=3

X.append(seq_x)

y.append(faixa)

return array(X), array(y)

# define input sequence

raw_seq = b3sa3['Close']

# choose a number of time steps

n_steps = 365

avanco = 21

# split into samples

X, y = split_sequence(raw_seq, n_steps,avanco)

# reshape from [samples, timesteps] into [samples, timesteps, features]

n_features = 1

X6 = X.reshape((X.shape[0], X.shape[1], n_features))转换为 6 类分类二进制数组

num_classes = 6

y6 = keras.utils.to_categorical(y, num_classes)适合

# define model

model6 = Sequential()

model6.add(LSTM(100, activation='tanh'))

model6.add(Dense(6,activation='sigmoid'))

model6.compile(optimizer='adam', loss="categorical_crossentropy", metrics=['accuracy'])

# fit model

model6.fit(X6, y6, validation_split=0.2, epochs=10)结果

85/85 [==============================] - 11s 113ms/step - loss: 1.5843 - accuracy: 0.2848 - val_loss: 1.6382 - val_accuracy: 0.1689

Epoch 2/10

85/85 [==============================] - 9s 111ms/step - loss: 1.5677 - accuracy: 0.2800 - val_loss: 1.6800 - val_accuracy: 0.1689

Epoch 3/10

85/85 [==============================] - 9s 111ms/step - loss: 1.5623 - accuracy: 0.2941 - val_loss: 1.6359 - val_accuracy: 0.1689

Epoch 4/10

85/85 [==============================] - 9s 111ms/step - loss: 1.5513 - accuracy: 0.2963 - val_loss: 1.6746 - val_accuracy: 0.1748

Epoch 5/10

85/85 [==============================] - 9s 111ms/step - loss: 1.5483 - accuracy: 0.3078 - val_loss: 1.6489 - val_accuracy: 0.2356

Epoch 6/10

85/85 [==============================] - 9s 110ms/step - loss: 1.5505 - accuracy: 0.2952 - val_loss: 1.6026 - val_accuracy: 0.2193

Epoch 7/10

85/85 [==============================] - 9s 111ms/step - loss: 1.5391 - accuracy: 0.3200 - val_loss: 1.6281 - val_accuracy: 0.2504

Epoch 8/10

85/85 [==============================] - 10s 114ms/step - loss: 1.5384 - accuracy: 0.3230 - val_loss: 1.6312 - val_accuracy: 0.1911

Epoch 9/10

85/85 [==============================] - 10s 117ms/step - loss: 1.5185 - accuracy: 0.3330 - val_loss: 1.6505 - val_accuracy: 0.2430

Epoch 10/10

85/85 [==============================] - 10s 116ms/step - loss: 1.5229 - accuracy: 0.3274 - val_loss: 1.6121 - val_accuracy: 0.2533• 到目前为止

正如您所看到的,每次有更多类时,您都会发现准确性较低。

我想把这些接近100%。

实际提高精度

首先,尝试六类分类模式

如果精度提高,请反映四类分类。

我尝试了很多,但答案很简单。

尝试和结果如下

– 简单地增加数据量 → 我们只有2007年的股票数据, 所以根本无法增加数据。

– 尝试增加功能量(除了“关闭”之外,还包括“打开”、“高”和“低”)→ 精度保持不变。

增加n_steps → 准确性保持不变。

– 尝试不同的参数值, → 精度保持不变。

使用 RNN → 精度下降

增加 epoch 数量 → 小时,但精度从 0.2533 增加到 0.4655

首先,由于其他类分类, 丹塞的活动被改为软最大值, 而不是 sigmoid→ 出于某种原因,精度保持不变。

结果

此外,我尝试了各种,但0.4655是限制在6类分类。

但是,在相同的 epoch 数和模型中尝试了四类分类,精度从 0.6063 上升到 0.7104。

是0.8或更多是好的,虽然

因为股票是一个不规则的领域。

分析数据可能足够准确。

最终更改

• 通过拖放防止过度学习

• 将丹塞的活动从模式更改为软最大值,因为它是其他类分类

增加10个电子波

Before

model = Sequential()

model.add(LSTM(100, activation='tanh'))

model.add(Dense(num_classes, activation='sigmoid'))

model.compile(optimizer='adam', loss="categorical_crossentropy", metrics=['accuracy'])

model6.fit(X, y, validation_split=0.2, epochs=10)After

model = Sequential()

model.add(LSTM(units=128, input_shape=(n_steps, n_features)))

model.add(Dropout(0.2))

model.add(Dense(units=num_classes, activation='softmax'))

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

model.fit(X6, y6, batch_size=32, epochs=100, validation_split=0.2)摘要

这一次,我们通过简单地增加 epoch 的数量来提高准确性。

机器学习

人对性能和质量的改变是必不可少的。

准确性可能是最可衡量的指标。

此外,为了提高精度,

寻找更好的算法

工程师需要好奇心和好奇心。

请求正在请求中

我们正在寻找您的请求和问题。