!pip install tensorflow-gpu

!pip install yfinance --upgrade --no-cache-dir

!pip install pandas_datareader

!pip install scikit-learn

!pip install -U protobuf==3.8.0

import math

import pandas_datareader.data as web

from datetime import date, timedelta

import pandas as pd

from numpy import array, reshape

from keras.models import Sequential

from keras.layers import LSTM, SimpleRNN, Dropout, Dense

import matplotlib.pyplot as plt

from sklearn.metrics import accuracy_score

from sklearn.preprocessing import MinMaxScaler

from sklearn.metrics import mean_absolute_error

from sklearn.model_selection import train_test_split

from keras.callbacks import EarlyStopping

import tensorflow as tf

from tensorflow import keras

import yfinance as yf

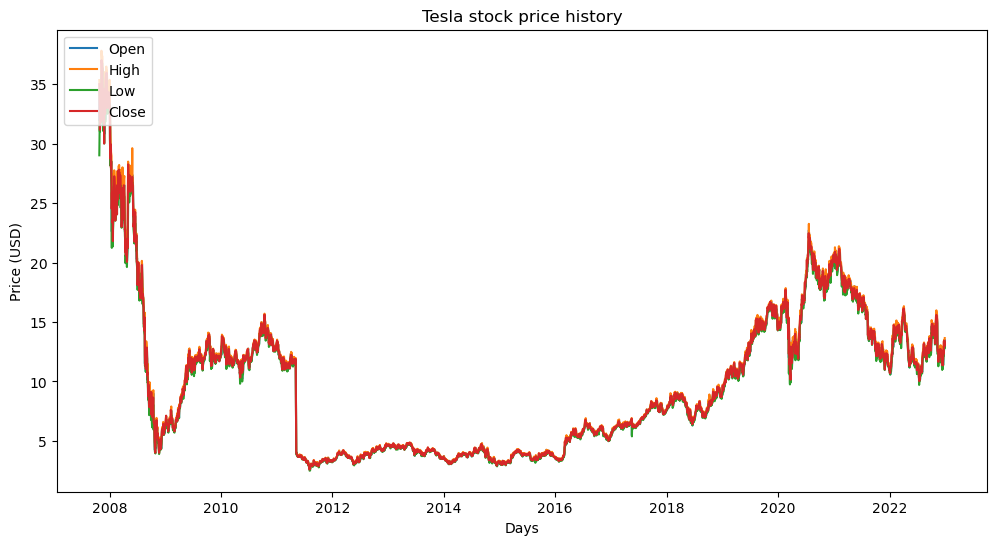

yf.pdr_override()

b3sa3 = web.get_data_yahoo("B3SA3.SA", start="2000-01-01", end="2022-12-31")

plt.figure(figsize = (12,6))

plt.plot(b3sa3["Open"])

plt.plot(b3sa3["High"])

plt.plot(b3sa3["Low"])

plt.plot(b3sa3["Close"])

plt.title('B3SA3 stock price history')

plt.ylabel('Price (BRL)')

plt.xlabel('Date')

plt.legend(['Open','High','Low','Close'], loc='upper left')

plt.show()

plt.figure(figsize = (12,6))

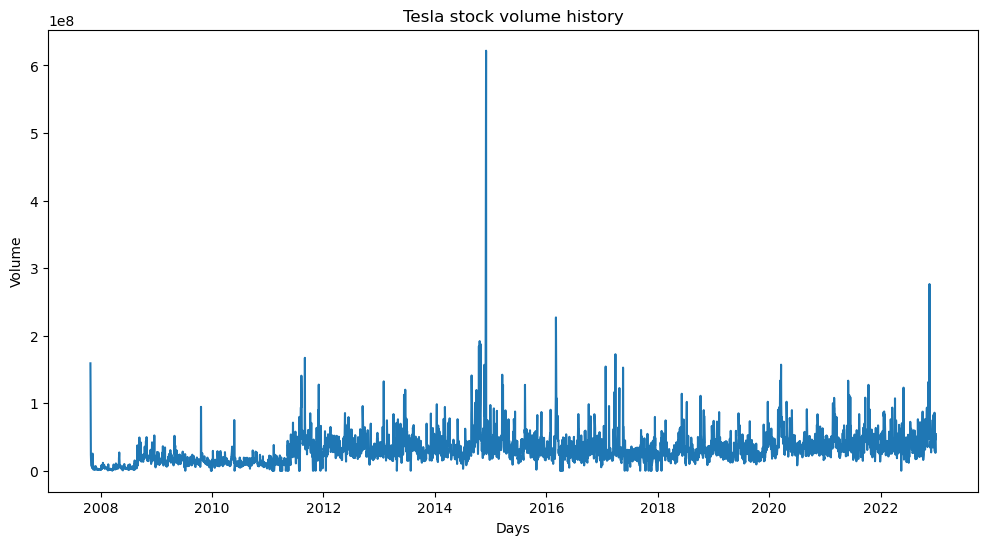

plt.plot(b3sa3["Volume"])

plt.title('B3SA3 stock volume history')

plt.ylabel('Volume')

plt.xlabel('Date')

plt.show()

data_target = b3sa3.filter(['Close'])

target = data_target.values

training_data_len = math.ceil(len(target)* 0.75)

training_data_len

sc = MinMaxScaler(feature_range=(0,1))

training_scaled_data = sc.fit_transform(target)
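Fitting the scaler on the whole series lets the minimum and maximum of the later (test) period influence how the training rows are scaled. A minimal sketch of one alternative, assuming the same 75% chronological split, fits the scaler on the training rows only and then applies it to everything. The `target` array here is a synthetic stand-in, not the downloaded prices.

```python
import math

import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Synthetic stand-in for the `target` array of closing prices (hypothetical values).
target = np.linspace(10.0, 30.0, 200).reshape(-1, 1)
training_data_len = math.ceil(len(target) * 0.75)

# Fit on the training rows only, then transform the full series.
sc = MinMaxScaler(feature_range=(0, 1))
sc.fit(target[:training_data_len])
scaled = sc.transform(target)

# Training rows span [0, 1]; later rows may fall outside that range.
print(scaled[:training_data_len].min(), scaled[:training_data_len].max())
print(scaled.max())
```

With this variant, scaled test values above 1 are expected whenever the price later exceeds its training-period maximum; `sc.inverse_transform` still maps predictions back to price units.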

training_scaled_data

# split a univariate sequence into samples

def split_sequence(sequence, n_steps, avanco):

    # avanco: how many steps past the end of the window the target lies

    X, y = list(), list()

    for i in range(len(sequence)):

        # find the end of this pattern

        end_ix = i + n_steps

        # check if we are beyond the sequence

        if end_ix+avanco > len(sequence)-1:

            break

        # gather input and output parts of the pattern

        seq_x, seq_y = sequence[i:end_ix], sequence[end_ix+avanco]

        # percentage change from the last value of the window to the target

        faixa = ((seq_y/seq_x[-1])-1)*100

        # map the change to a band (faixa) from -3 to 3

        if faixa <-15:

            faixa=-3

        elif faixa <-5:

            faixa=-2

        elif faixa <0:

            faixa=-1

        elif faixa <5:

            faixa=1

        elif faixa <15:

            faixa=2

        else:

            faixa=3

        X.append(seq_x)

        y.append(faixa)

    return array(X), array(y)

train_data = training_scaled_data[0:training_data_len , : ]

X_train = []

y_train = []

for i in range(180, len(train_data)):

    X_train.append(train_data[i-180:i, 0])

    y_train.append(train_data[i, 0])

print(X_train)

X_train, y_train = array(X_train), array(y_train)

X_train = reshape(X_train, (X_train.shape[0], X_train.shape[1], 1))
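To make the windowing concrete, here is a small sketch of the same idea on a toy series, with a window of 3 instead of 180. The helper name `make_windows` is hypothetical and not part of the notebook.

```python
import numpy as np


def make_windows(series, n_steps):
    """Each row of X holds n_steps consecutive values; y holds the value that follows."""
    X = np.array([series[i - n_steps:i] for i in range(n_steps, len(series))])
    y = series[n_steps:]
    # The LSTM expects input shaped (samples, timesteps, features).
    return X.reshape(X.shape[0], n_steps, 1), y


series = np.arange(10, dtype=float)
X, y = make_windows(series, n_steps=3)
print(X.shape, y.shape)     # (7, 3, 1) (7,)
print(X[0].ravel(), y[0])   # [0. 1. 2.] 3.0
```

A series of length N yields N minus `n_steps` samples, so the first 180 rows of the training data serve only as history for the first target.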

print('Number of rows and columns: ', X_train.shape)

model = Sequential()

#Adding the first LSTM layer and some Dropout regularisation

model.add(LSTM(units = 50, return_sequences = True, input_shape = (X_train.shape[1], 1)))

model.add(Dropout(0.2))

# Adding a second LSTM layer and some Dropout regularisation

model.add(LSTM(units = 50, return_sequences = True))

model.add(Dropout(0.2))

# Adding a third LSTM layer and some Dropout regularisation

model.add(LSTM(units = 50, return_sequences = True))

model.add(Dropout(0.2))

# Adding a fourth LSTM layer and some Dropout regularisation

model.add(LSTM(units = 50))

model.add(Dropout(0.2))

# Adding the output layer

model.add(Dense(units = 1))

# Compiling

model.compile(optimizer='adam', loss='mean_squared_error', metrics=['mae', 'acc'])

# Fitting the RNN to the Training set

model.fit(X_train, y_train, epochs = 100, batch_size = 32)

Epoch 1/100

83/83 [==============================] - 19s 164ms/step - loss: 0.0022 - mae: 0.0293 - acc: 3.7864e-04

Epoch 2/100

83/83 [==============================] - 14s 171ms/step - loss: 7.5855e-04 - mae: 0.0168 - acc: 3.7864e-04

Epoch 3/100

83/83 [==============================] - 15s 183ms/step - loss: 6.4637e-04 - mae: 0.0156 - acc: 3.7864e-04

Epoch 4/100

83/83 [==============================] - 15s 176ms/step - loss: 6.5974e-04 - mae: 0.0156 - acc: 3.7864e-04

Epoch 5/100

83/83 [==============================] - 15s 178ms/step - loss: 5.6503e-04 - mae: 0.0149 - acc: 3.7864e-04

Epoch 6/100

83/83 [==============================] - 15s 179ms/step - loss: 5.4539e-04 - mae: 0.0154 - acc: 3.7864e-04

Epoch 7/100

83/83 [==============================] - 15s 179ms/step - loss: 4.2728e-04 - mae: 0.0131 - acc: 3.7864e-04

Epoch 8/100

83/83 [==============================] - 15s 180ms/step - loss: 4.4834e-04 - mae: 0.0133 - acc: 3.7864e-04

Epoch 9/100

83/83 [==============================] - 15s 184ms/step - loss: 4.4234e-04 - mae: 0.0130 - acc: 3.7864e-04

Epoch 10/100

83/83 [==============================] - 15s 186ms/step - loss: 4.5409e-04 - mae: 0.0139 - acc: 3.7864e-04

Epoch 11/100

83/83 [==============================] - 16s 197ms/step - loss: 4.1856e-04 - mae: 0.0127 - acc: 3.7864e-04

Epoch 12/100

83/83 [==============================] - 16s 187ms/step - loss: 4.0557e-04 - mae: 0.0131 - acc: 3.7864e-04

Epoch 13/100

83/83 [==============================] - 15s 185ms/step - loss: 3.6888e-04 - mae: 0.0120 - acc: 3.7864e-04

Epoch 14/100

83/83 [==============================] - 15s 186ms/step - loss: 3.8366e-04 - mae: 0.0127 - acc: 3.7864e-04

Epoch 15/100

83/83 [==============================] - 16s 189ms/step - loss: 3.4191e-04 - mae: 0.0118 - acc: 3.7864e-04

Epoch 16/100

83/83 [==============================] - 15s 178ms/step - loss: 3.4022e-04 - mae: 0.0118 - acc: 3.7864e-04

Epoch 17/100

83/83 [==============================] - 15s 175ms/step - loss: 3.4134e-04 - mae: 0.0122 - acc: 3.7864e-04

Epoch 18/100

83/83 [==============================] - 15s 178ms/step - loss: 3.3549e-04 - mae: 0.0118 - acc: 3.7864e-04

Epoch 19/100

83/83 [==============================] - 15s 179ms/step - loss: 3.3823e-04 - mae: 0.0120 - acc: 3.7864e-04

Epoch 20/100

83/83 [==============================] - 15s 179ms/step - loss: 2.7471e-04 - mae: 0.0105 - acc: 3.7864e-04

Epoch 21/100

83/83 [==============================] - 15s 184ms/step - loss: 3.1111e-04 - mae: 0.0114 - acc: 3.7864e-04

Epoch 22/100

83/83 [==============================] - 15s 182ms/step - loss: 2.7048e-04 - mae: 0.0108 - acc: 3.7864e-04

Epoch 23/100

83/83 [==============================] - 15s 177ms/step - loss: 2.6737e-04 - mae: 0.0106 - acc: 3.7864e-04

Epoch 24/100

83/83 [==============================] - 12s 143ms/step - loss: 3.0102e-04 - mae: 0.0114 - acc: 3.7864e-04

Epoch 25/100

83/83 [==============================] - 12s 148ms/step - loss: 2.7399e-04 - mae: 0.0109 - acc: 3.7864e-04

Epoch 26/100

83/83 [==============================] - 12s 143ms/step - loss: 2.8185e-04 - mae: 0.0110 - acc: 3.7864e-04

Epoch 27/100

83/83 [==============================] - 12s 143ms/step - loss: 2.7066e-04 - mae: 0.0108 - acc: 3.7864e-04

Epoch 28/100

83/83 [==============================] - 12s 141ms/step - loss: 2.4524e-04 - mae: 0.0104 - acc: 3.7864e-04

Epoch 29/100

83/83 [==============================] - 12s 140ms/step - loss: 2.4877e-04 - mae: 0.0104 - acc: 3.7864e-04

Epoch 30/100

83/83 [==============================] - 12s 140ms/step - loss: 2.5145e-04 - mae: 0.0108 - acc: 3.7864e-04

Epoch 31/100

83/83 [==============================] - 12s 142ms/step - loss: 2.5053e-04 - mae: 0.0104 - acc: 3.7864e-04

Epoch 32/100

83/83 [==============================] - 12s 144ms/step - loss: 2.5941e-04 - mae: 0.0107 - acc: 3.7864e-04

Epoch 33/100

83/83 [==============================] - 12s 145ms/step - loss: 2.1628e-04 - mae: 0.0096 - acc: 3.7864e-04

Epoch 34/100

83/83 [==============================] - 12s 148ms/step - loss: 2.5347e-04 - mae: 0.0104 - acc: 3.7864e-04

Epoch 35/100

83/83 [==============================] - 12s 144ms/step - loss: 2.8450e-04 - mae: 0.0117 - acc: 3.7864e-04

Epoch 36/100

83/83 [==============================] - 12s 147ms/step - loss: 2.1696e-04 - mae: 0.0097 - acc: 3.7864e-04

Epoch 37/100

83/83 [==============================] - 12s 141ms/step - loss: 2.1587e-04 - mae: 0.0099 - acc: 3.7864e-04

Epoch 38/100

83/83 [==============================] - 12s 144ms/step - loss: 2.1839e-04 - mae: 0.0099 - acc: 3.7864e-04

Epoch 39/100

83/83 [==============================] - 12s 145ms/step - loss: 2.1115e-04 - mae: 0.0099 - acc: 3.7864e-04

Epoch 40/100

83/83 [==============================] - 12s 142ms/step - loss: 1.9515e-04 - mae: 0.0094 - acc: 3.7864e-04

Epoch 41/100

83/83 [==============================] - 12s 146ms/step - loss: 2.3016e-04 - mae: 0.0102 - acc: 3.7864e-04

Epoch 42/100

83/83 [==============================] - 12s 142ms/step - loss: 2.1981e-04 - mae: 0.0099 - acc: 3.7864e-04

Epoch 43/100

83/83 [==============================] - 12s 143ms/step - loss: 2.2498e-04 - mae: 0.0102 - acc: 3.7864e-04

Epoch 44/100

83/83 [==============================] - 12s 143ms/step - loss: 2.5379e-04 - mae: 0.0109 - acc: 3.7864e-04

Epoch 45/100

83/83 [==============================] - 12s 143ms/step - loss: 2.1705e-04 - mae: 0.0099 - acc: 3.7864e-04

Epoch 46/100

83/83 [==============================] - 12s 140ms/step - loss: 2.2145e-04 - mae: 0.0103 - acc: 3.7864e-04

Epoch 47/100

83/83 [==============================] - 12s 139ms/step - loss: 1.9548e-04 - mae: 0.0092 - acc: 3.7864e-04

Epoch 48/100

83/83 [==============================] - 12s 141ms/step - loss: 2.0833e-04 - mae: 0.0095 - acc: 3.7864e-04

Epoch 49/100

83/83 [==============================] - 12s 141ms/step - loss: 1.9410e-04 - mae: 0.0095 - acc: 3.7864e-04

Epoch 50/100

83/83 [==============================] - 12s 142ms/step - loss: 1.9222e-04 - mae: 0.0094 - acc: 3.7864e-04

Epoch 51/100

83/83 [==============================] - 12s 139ms/step - loss: 2.2112e-04 - mae: 0.0103 - acc: 3.7864e-04

Epoch 52/100

83/83 [==============================] - 12s 141ms/step - loss: 1.9559e-04 - mae: 0.0095 - acc: 3.7864e-04

Epoch 53/100

83/83 [==============================] - 12s 142ms/step - loss: 2.0208e-04 - mae: 0.0096 - acc: 3.7864e-04

Epoch 54/100

83/83 [==============================] - 12s 141ms/step - loss: 1.8062e-04 - mae: 0.0092 - acc: 3.7864e-04

Epoch 55/100

83/83 [==============================] - 12s 142ms/step - loss: 1.9474e-04 - mae: 0.0095 - acc: 3.7864e-04

Epoch 56/100

83/83 [==============================] - 12s 141ms/step - loss: 2.0758e-04 - mae: 0.0096 - acc: 3.7864e-04

Epoch 57/100

83/83 [==============================] - 12s 142ms/step - loss: 1.9136e-04 - mae: 0.0093 - acc: 3.7864e-04

Epoch 58/100

83/83 [==============================] - 12s 141ms/step - loss: 2.1050e-04 - mae: 0.0099 - acc: 3.7864e-04

Epoch 59/100

83/83 [==============================] - 12s 141ms/step - loss: 2.2446e-04 - mae: 0.0104 - acc: 3.7864e-04

Epoch 60/100

83/83 [==============================] - 12s 142ms/step - loss: 2.1025e-04 - mae: 0.0099 - acc: 3.7864e-04

Epoch 61/100

83/83 [==============================] - 12s 142ms/step - loss: 1.9358e-04 - mae: 0.0095 - acc: 3.7864e-04

Epoch 62/100

83/83 [==============================] - 12s 143ms/step - loss: 1.9089e-04 - mae: 0.0094 - acc: 3.7864e-04

Epoch 63/100

83/83 [==============================] - 12s 143ms/step - loss: 2.0180e-04 - mae: 0.0097 - acc: 3.7864e-04

Epoch 64/100

83/83 [==============================] - 12s 143ms/step - loss: 1.9502e-04 - mae: 0.0095 - acc: 3.7864e-04

Epoch 65/100

83/83 [==============================] - 12s 142ms/step - loss: 1.9852e-04 - mae: 0.0095 - acc: 3.7864e-04

Epoch 66/100

83/83 [==============================] - 12s 141ms/step - loss: 2.0266e-04 - mae: 0.0100 - acc: 3.7864e-04

Epoch 67/100

83/83 [==============================] - 12s 143ms/step - loss: 1.8904e-04 - mae: 0.0091 - acc: 3.7864e-04

Epoch 68/100

83/83 [==============================] - 12s 142ms/step - loss: 2.0111e-04 - mae: 0.0097 - acc: 3.7864e-04

Epoch 69/100

83/83 [==============================] - 12s 144ms/step - loss: 1.9283e-04 - mae: 0.0095 - acc: 3.7864e-04

Epoch 70/100

83/83 [==============================] - 12s 146ms/step - loss: 1.9512e-04 - mae: 0.0095 - acc: 3.7864e-04

Epoch 71/100

83/83 [==============================] - 12s 144ms/step - loss: 1.7657e-04 - mae: 0.0091 - acc: 3.7864e-04

Epoch 72/100

83/83 [==============================] - 12s 145ms/step - loss: 1.7844e-04 - mae: 0.0091 - acc: 3.7864e-04

Epoch 73/100

83/83 [==============================] - 12s 144ms/step - loss: 2.1166e-04 - mae: 0.0098 - acc: 3.7864e-04

Epoch 74/100

83/83 [==============================] - 12s 145ms/step - loss: 1.6239e-04 - mae: 0.0088 - acc: 3.7864e-04

Epoch 75/100

83/83 [==============================] - 12s 145ms/step - loss: 2.0147e-04 - mae: 0.0097 - acc: 3.7864e-04

Epoch 76/100

83/83 [==============================] - 12s 143ms/step - loss: 1.8721e-04 - mae: 0.0095 - acc: 3.7864e-04

Epoch 77/100

83/83 [==============================] - 12s 144ms/step - loss: 1.7040e-04 - mae: 0.0091 - acc: 3.7864e-04

Epoch 78/100

83/83 [==============================] - 12s 144ms/step - loss: 1.7755e-04 - mae: 0.0091 - acc: 3.7864e-04

Epoch 79/100

83/83 [==============================] - 12s 143ms/step - loss: 1.8537e-04 - mae: 0.0091 - acc: 3.7864e-04

Epoch 80/100

83/83 [==============================] - 12s 143ms/step - loss: 1.7636e-04 - mae: 0.0089 - acc: 3.7864e-04

Epoch 81/100

83/83 [==============================] - 12s 142ms/step - loss: 1.7798e-04 - mae: 0.0092 - acc: 3.7864e-04

Epoch 82/100

83/83 [==============================] - 12s 144ms/step - loss: 1.7796e-04 - mae: 0.0092 - acc: 3.7864e-04

Epoch 83/100

83/83 [==============================] - 12s 145ms/step - loss: 1.7401e-04 - mae: 0.0090 - acc: 3.7864e-04

Epoch 84/100

83/83 [==============================] - 12s 145ms/step - loss: 2.0091e-04 - mae: 0.0096 - acc: 3.7864e-04

Epoch 85/100

83/83 [==============================] - 12s 144ms/step - loss: 1.7121e-04 - mae: 0.0089 - acc: 3.7864e-04

Epoch 86/100

83/83 [==============================] - 12s 144ms/step - loss: 1.8250e-04 - mae: 0.0092 - acc: 3.7864e-04

Epoch 87/100

83/83 [==============================] - 12s 144ms/step - loss: 1.7934e-04 - mae: 0.0094 - acc: 3.7864e-04

Epoch 88/100

83/83 [==============================] - 12s 145ms/step - loss: 1.6739e-04 - mae: 0.0087 - acc: 3.7864e-04

Epoch 89/100

83/83 [==============================] - 12s 144ms/step - loss: 1.7841e-04 - mae: 0.0090 - acc: 3.7864e-04

Epoch 90/100

83/83 [==============================] - 12s 144ms/step - loss: 1.7798e-04 - mae: 0.0091 - acc: 3.7864e-04

Epoch 91/100

83/83 [==============================] - 12s 145ms/step - loss: 2.0648e-04 - mae: 0.0099 - acc: 3.7864e-04

Epoch 92/100

83/83 [==============================] - 12s 144ms/step - loss: 1.6237e-04 - mae: 0.0087 - acc: 3.7864e-04

Epoch 93/100

83/83 [==============================] - 12s 144ms/step - loss: 1.5727e-04 - mae: 0.0086 - acc: 3.7864e-04

Epoch 94/100

83/83 [==============================] - 12s 146ms/step - loss: 1.7447e-04 - mae: 0.0091 - acc: 3.7864e-04

Epoch 95/100

83/83 [==============================] - 12s 144ms/step - loss: 1.9625e-04 - mae: 0.0095 - acc: 3.7864e-04

Epoch 96/100

83/83 [==============================] - 12s 144ms/step - loss: 1.6939e-04 - mae: 0.0089 - acc: 3.7864e-04

Epoch 97/100

83/83 [==============================] - 12s 145ms/step - loss: 1.6023e-04 - mae: 0.0084 - acc: 3.7864e-04

Epoch 98/100

83/83 [==============================] - 12s 143ms/step - loss: 1.7082e-04 - mae: 0.0088 - acc: 3.7864e-04

Epoch 99/100

83/83 [==============================] - 12s 143ms/step - loss: 1.7704e-04 - mae: 0.0091 - acc: 3.7864e-04

Epoch 100/100

83/83 [==============================] - 12s 142ms/step - loss: 1.7186e-04 - mae: 0.0090 - acc: 3.7864e-04
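The `acc` column stays at 3.7864e-04 in every epoch, which is roughly 1/2641 and consistent with 83 batches of 32 samples. Accuracy counts exact matches, so it carries little information for a continuous target. The sketch below reproduces the effect in NumPy under the assumption that Keras resolves `'acc'` to a thresholded binary accuracy for a single-unit output; that assumption, the helper `binary_accuracy`, and the synthetic data are all illustrative.

```python
import numpy as np


def binary_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of samples whose thresholded prediction equals the target exactly."""
    return float(np.mean((y_pred > threshold).astype(float) == y_true))


rng = np.random.default_rng(0)
prices = rng.uniform(10.0, 50.0, size=1000)

# Min-max scaling: only the extreme prices map to exactly 0.0 or 1.0.
scaled = (prices - prices.min()) / (prices.max() - prices.min())

# Predictions that are close to the targets but not identical.
preds = np.clip(scaled + rng.normal(0.0, 0.01, size=scaled.shape), 0.0, 1.0)

acc = binary_accuracy(scaled, preds)
mae = float(np.mean(np.abs(scaled - preds)))
print(acc, mae)
```

Only the samples sitting exactly at 0 or 1 can ever count as correct, so the accuracy stays near zero even though the mean absolute error is small. For this model, `loss` and `mae` are the columns worth reading.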

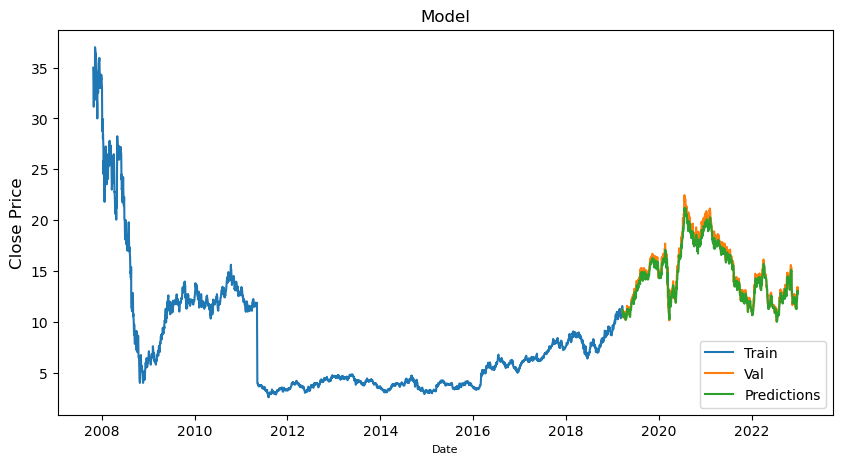

# Getting the predicted stock price

test_data = training_scaled_data[training_data_len - 180: , : ]

#Create the x_test and y_test data sets

X_test = []

y_test = target[training_data_len : , : ]

for i in range(180,len(test_data)):

    X_test.append(test_data[i-180:i,0])

# Convert x_test to a numpy array

X_test = array(X_test)

#Reshape the data into the shape accepted by the LSTM

X_test = reshape(X_test, (X_test.shape[0],X_test.shape[1],1))
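The test slice starts 180 rows before `training_data_len` so that the first test target already has a full window of history. A toy sketch with a window of 4 (the notebook uses 180) shows that the number of windows then matches the number of test targets. All values here are synthetic.

```python
import numpy as np

n_steps = 4                          # the notebook uses 180
series = np.arange(20, dtype=float)  # stand-in for the scaled closing prices
training_data_len = 15

# Start n_steps rows early so the first test target has a complete window.
test_data = series[training_data_len - n_steps:]
X_test = np.array([test_data[i - n_steps:i] for i in range(n_steps, len(test_data))])
y_test = series[training_data_len:]

print(X_test.shape, y_test.shape)  # (5, 4) (5,)
print(X_test[0], y_test[0])        # [11. 12. 13. 14.] 15.0
```

The first window consists entirely of the last training rows, which is fine for inputs: only the targets need to come from the held-out period.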

print('Number of rows and columns: ', X_test.shape)

# Making predictions using the test dataset

predicted_stock_price = model.predict(X_test)

predicted_stock_price = sc.inverse_transform(predicted_stock_price)

# Visualising the results

train = data_target[:training_data_len]

# copy so that adding a column does not write into a slice of data_target

valid = data_target[training_data_len:].copy()

valid['Predictions'] = predicted_stock_price

plt.figure(figsize=(10,5))

plt.title('Model')

plt.xlabel('Date', fontsize=8)

plt.ylabel('Close Price', fontsize=12)

plt.plot(train['Close'])

plt.plot(valid[['Close', 'Predictions']])

plt.legend(['Train', 'Val', 'Predictions'], loc='lower right')

plt.show()
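The plot gives a visual check only. A numerical summary in price units could be computed along these lines, as a sketch: the helper `regression_errors` is hypothetical, and the arrays are made-up stand-ins for `y_test` and `predicted_stock_price`.

```python
import numpy as np


def regression_errors(actual, predicted):
    """Return mean absolute error and root mean squared error."""
    actual = np.asarray(actual, dtype=float).ravel()
    predicted = np.asarray(predicted, dtype=float).ravel()
    errors = predicted - actual
    return {
        "mae": float(np.mean(np.abs(errors))),
        "rmse": float(np.sqrt(np.mean(errors ** 2))),
    }


# Made-up column vectors shaped like y_test and predicted_stock_price.
actual = np.array([[10.0], [11.0], [12.0], [13.0]])
predicted = np.array([[10.5], [10.5], [12.0], [14.0]])

metrics = regression_errors(actual, predicted)
print(metrics)
```

Because both arrays are in the original price scale (after `inverse_transform`), the resulting errors read directly in the currency of the quote.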