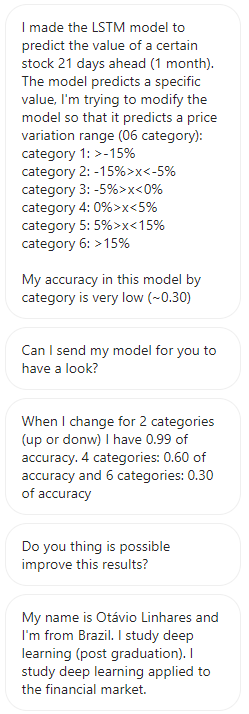

A DM came asking if you could improve the accuracy of the LSTM model.

We will answer how to improve the actual accuracy.

in a word

Yahoo Finance is forecasting the stock of a company called Brazil Bolsa Barcao.

The accuracy decreases as the conditional branch of the change rate increases, so I would like you to consider a model.

It is a request.

Let's take a look at how to improve accuracy in practice.

What to do when prediction accuracy is not possible

I've written an article before.

There is also the basis for improving accuracy.

Usually, when the prediction accuracy is poor, the following is what to try.

When overfitting

・Collect more training data

・Reduce feature n

Increase the regularization coefficient λ

When underfitting

Increase the feature n

・ Add higher-order features

・Reduce the regularization coefficient λ

Environment

・WSL2

・Jupyter Notebook

* The person who sent it was a Colab environment, so it seems that there is no problem with Colab

Source code sent to you

I want you to improve the accuracy of your predictions.

The actual source code was sent to me.

Let's decipher the source code first.

The information is open and for study, so I don't think there is any problem in disclosing it.

↓ From here

Data Preparation

Install the package

!pip install tensorflow-gpu

!pip install yfinance --upgrade --no-cache-dir

!pip install pandas_datareaderImport

import pandas_datareader.data as web

from datetime import date, timedelta

import pandas as pd

from numpy import array

from keras.models import Sequential

from keras.layers import LSTM

from keras.layers import Dense

import matplotlib.pyplot as plt

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras.layers import Dropout

import yfinance as yf

yf.pdr_override()Get the share price of Brazilian Bolsa Barcao from 2000 to 2022

b3sa3 = web.get_data_yahoo("B3SA3.SA",start="2000-01-01", end="2022-12-31",interval="1d")Set test data and boundaries

hj=date.today()

td0 = timedelta(1)

td1 = timedelta(800)

td2 = timedelta(21)

end = hj-td2

start = end-td1

yesterday=hj-td0

print(start, end, hj)

b3sa3_test = web.get_data_yahoo("B3SA3.SA",start=start, end=end,interval="1d")

b3sa3_hj = web.get_data_yahoo("B3SA3.SA",start=yesterday, end=hj,interval="1d")

b3sa3_test = b3sa3_test.tail(365)Test

b3sa3_testDecision boundaries in classification

b3sa3_hjData review for the first 5 rows

b3sa3.head()Check the data in the last 5 rows

b3sa3.tail()Null check

b3sa3.isnull().sum()Data information confirmation

b3sa3.info()In the case of two-class classification

Model

# split a univariate sequence into samples

def split_sequence(sequence, n_steps, avanco):

X, y = list(), list()

for i in range(len(sequence)):

# find the end of this pattern

end_ix = i + n_steps

z=end_ix-1

# check if we are beyond the sequence

if end_ix+avanco > len(sequence)-1:

break

# gather input and output parts of the pattern

seq_x, seq_y = sequence[i:end_ix], sequence[end_ix+avanco]

e=seq_y

f=seq_x[-1]

faixa = ((e/f)-1)*100

if faixa <0:

faixa=-1

else:

faixa=1

X.append(seq_x)

y.append(faixa)

return array(X), array(y)

# define input sequence

raw_seq = b3sa3['Close']

# choose a number of time steps

n_steps = 365

avanco = 21

# split into samples

X, y = split_sequence(raw_seq, n_steps,avanco)

# reshape from [samples, timesteps] into [samples, timesteps, features]

n_features = 1

X2 = X.reshape((X.shape[0], X.shape[1], n_features))Convert to a binary array classified by two classes

num_classes = 2

y2 = keras.utils.to_categorical(y, num_classes)Fitting

# define model

model2 = Sequential()

model2.add(LSTM(100, activation='tanh'))

model2.add(Dense(2,activation='sigmoid'))

model2.compile(optimizer='adam', loss="categorical_crossentropy", metrics=['accuracy'])

checkpoint_filepath = '/checkpoint'

# fit model

model2.fit(X2, y2, validation_split=0.2, epochs=10)Results

Epoch 1/10 85/85 [==============================] - 11s 119ms/step - loss: 0.0246 - accuracy: 0.9926 - val_loss: 3.0749e-04 - val_accuracy: 1.0000 Epoch 2/10 85/85 [==============================] - 10s 118ms/step - loss: 1.7007e-04 - accuracy: 1.0000 - val_loss: 1.6888e-04 - val_accuracy: 1.0000 Epoch 3/10 85/85 [==============================] - 10s 120ms/step - loss: 9.2416e-05 - accuracy: 1.0000 - val_loss: 1.0388e-04 - val_accuracy: 1.0000 Epoch 4/10 85/85 [==============================] - 10s 121ms/step - loss: 5.6772e-05 - accuracy: 1.0000 - val_loss: 7.2730e-05 - val_accuracy: 1.0000 Epoch 5/10 85/85 [==============================] - 10s 121ms/step - loss: 3.9265e-05 - accuracy: 1.0000 - val_loss: 5.5672e-05 - val_accuracy: 1.0000 Epoch 6/10 85/85 [==============================] - 10s 122ms/step - loss: 2.9215e-05 - accuracy: 1.0000 - val_loss: 4.4618e-05 - val_accuracy: 1.0000 Epoch 7/10 85/85 [==============================] - 10s 115ms/step - loss: 2.2800e-05 - accuracy: 1.0000 - val_loss: 3.6798e-05 - val_accuracy: 1.0000 Epoch 8/10 85/85 [==============================] - 10s 116ms/step - loss: 1.8407e-05 - accuracy: 1.0000 - val_loss: 3.0964e-05 - val_accuracy: 1.0000 Epoch 9/10 85/85 [==============================] - 10s 118ms/step - loss: 1.5248e-05 - accuracy: 1.0000 - val_loss: 2.6502e-05 - val_accuracy: 1.0000 Epoch 10/10 85/85 [==============================] - 10s 118ms/step - loss: 1.2893e-05 - accuracy: 1.0000 - val_loss: 2.3007e-05 - val_accuracy: 1.0000

In the case of 4 classes

Model

# split a univariate sequence into samples

def split_sequence(sequence, n_steps, avanco):

X, y =list(), list()

for i in range(len(sequence)):

# find the end of this pattern

end_ix = i + n_steps

z=end_ix-1

# check if we are beyond the sequence

if end_ix+avanco > len(sequence)-1:

break

# gather input and output parts of the pattern

seq_x, seq_y = sequence[i:end_ix], sequence[end_ix+avanco]

e=seq_y

f=seq_x[-1]

faixa = ((e/f)-1)*100

if faixa <-5:

faixa=-2

elif faixa <0:

faixa=-1

elif faixa <5:

faixa=1

elif faixa >5:

faixa=2

X.append(seq_x)

y.append(faixa)

return array(X), array(y)

# define input sequence

raw_seq = b3sa3['Close']

# choose a number of time steps

n_steps = 365

avanco = 21

# split into samples

X, y = split_sequence(raw_seq, n_steps,avanco)

# reshape from [samples, timesteps] into [samples, timesteps, features]

n_features = 1

X4 = X.reshape((X.shape[0], X.shape[1], n_features))Convert to a binary array classified into 4 classes

num_classes = 4

y4 = keras.utils.to_categorical(y, num_classes)Fitting

# define model

model4 = Sequential()

model4.add(LSTM(100, activation='tanh'))

model4.add(Dense(4,activation='sigmoid'))

model4.compile(optimizer='adam', loss="categorical_crossentropy", metrics=['accuracy'])

# fit model

model4.fit(X4, y4, validation_split=0.2, epochs=10)Results

Epoch 1/10

85/85 [==============================] - 11s 121ms/step - loss: 0.9769 - accuracy: 0.5952 - val_loss: 0.9037 - val_accuracy: 0.6356

Epoch 2/10

85/85 [==============================] - 10s 118ms/step - loss: 0.9510 - accuracy: 0.6063 - val_loss: 0.9116 - val_accuracy: 0.6356

Epoch 3/10

85/85 [==============================] - 10s 116ms/step - loss: 0.9541 - accuracy: 0.6063 - val_loss: 0.9150 - val_accuracy: 0.6356

Epoch 4/10

85/85 [==============================] - 10s 117ms/step - loss: 0.9476 - accuracy: 0.6063 - val_loss: 0.9061 - val_accuracy: 0.6356

Epoch 5/10

85/85 [==============================] - 10s 115ms/step - loss: 0.9522 - accuracy: 0.6063 - val_loss: 0.9117 - val_accuracy: 0.6356

Epoch 6/10

85/85 [==============================] - 10s 114ms/step - loss: 0.9493 - accuracy: 0.6063 - val_loss: 0.9010 - val_accuracy: 0.6356

Epoch 7/10

85/85 [==============================] - 10s 114ms/step - loss: 0.9497 - accuracy: 0.6063 - val_loss: 0.9049 - val_accuracy: 0.6356

Epoch 8/10

85/85 [==============================] - 10s 118ms/step - loss: 0.9449 - accuracy: 0.6063 - val_loss: 0.9087 - val_accuracy: 0.6356

Epoch 9/10

85/85 [==============================] - 10s 119ms/step - loss: 0.9478 - accuracy: 0.6063 - val_loss: 0.9058 - val_accuracy: 0.6356

Epoch 10/10

85/85 [==============================] - 10s 119ms/step - loss: 0.9452 - accuracy: 0.6063 - val_loss: 0.9055 - val_accuracy: 0.6356

6 Classification

Model

def split_sequence(sequence, n_steps, avanco):

X, y = list(), list()

for i in range(len(sequence)):

# find the end of this pattern

end_ix = i + n_steps

z=end_ix-1

# check if we are beyond the sequence

if end_ix+avanco > len(sequence)-1:

break

# gather input and output parts of the pattern

seq_x, seq_y = sequence[i:end_ix], sequence[end_ix+avanco]

e=seq_y

f=seq_x[-1]

faixa = ((e/f)-1)*100

if faixa <-15:

faixa=-3

elif faixa <-5:

faixa=-2

elif faixa <0:

faixa=-1

elif faixa <5:

faixa=1

elif faixa <15:

faixa=2

elif faixa >15:

faixa=3

X.append(seq_x)

y.append(faixa)

return array(X), array(y)

# define input sequence

raw_seq = b3sa3['Close']

# choose a number of time steps

n_steps = 365

avanco = 21

# split into samples

X, y = split_sequence(raw_seq, n_steps,avanco)

# reshape from [samples, timesteps] into [samples, timesteps, features]

n_features = 1

X6 = X.reshape((X.shape[0], X.shape[1], n_features))Convert to a binary array classified into 6 classes

num_classes = 6

y6 = keras.utils.to_categorical(y, num_classes)Fitting

# define model

model6 = Sequential()

model6.add(LSTM(100, activation='tanh'))

model6.add(Dense(6,activation='sigmoid'))

model6.compile(optimizer='adam', loss="categorical_crossentropy", metrics=['accuracy'])

# fit model

model6.fit(X6, y6, validation_split=0.2, epochs=10)Results

85/85 [==============================] - 11s 113ms/step - loss: 1.5843 - accuracy: 0.2848 - val_loss: 1.6382 - val_accuracy: 0.1689

Epoch 2/10

85/85 [==============================] - 9s 111ms/step - loss: 1.5677 - accuracy: 0.2800 - val_loss: 1.6800 - val_accuracy: 0.1689

Epoch 3/10

85/85 [==============================] - 9s 111ms/step - loss: 1.5623 - accuracy: 0.2941 - val_loss: 1.6359 - val_accuracy: 0.1689

Epoch 4/10

85/85 [==============================] - 9s 111ms/step - loss: 1.5513 - accuracy: 0.2963 - val_loss: 1.6746 - val_accuracy: 0.1748

Epoch 5/10

85/85 [==============================] - 9s 111ms/step - loss: 1.5483 - accuracy: 0.3078 - val_loss: 1.6489 - val_accuracy: 0.2356

Epoch 6/10

85/85 [==============================] - 9s 110ms/step - loss: 1.5505 - accuracy: 0.2952 - val_loss: 1.6026 - val_accuracy: 0.2193

Epoch 7/10

85/85 [==============================] - 9s 111ms/step - loss: 1.5391 - accuracy: 0.3200 - val_loss: 1.6281 - val_accuracy: 0.2504

Epoch 8/10

85/85 [==============================] - 10s 114ms/step - loss: 1.5384 - accuracy: 0.3230 - val_loss: 1.6312 - val_accuracy: 0.1911

Epoch 9/10

85/85 [==============================] - 10s 117ms/step - loss: 1.5185 - accuracy: 0.3330 - val_loss: 1.6505 - val_accuracy: 0.2430

Epoch 10/10

85/85 [==============================] - 10s 116ms/step - loss: 1.5229 - accuracy: 0.3274 - val_loss: 1.6121 - val_accuracy: 0.2533↑ So far

As you can see, the accuracy decreases as the number of classes increases.

I want these to be close to 100%

Actually improve accuracy

First, try the pattern of 6 classes

When the accuracy improves, let's reflect it on the 4 class classifications.

I tried many things, but the answer was simple and clear.

Here's what I tried and the results

・ Try simply increasing the amount of data → I couldn't increase the data in the first place because I only have stock data from 2007

・ Try increasing the feature (including 'Open', 'High', 'Low' in addition to 'Close') → The accuracy remained the same.

・ Try increasing the n_steps → The accuracy remained the same.

Experiment with different values of the avanco "parameter" → the accuracy remained the same

・ RNN used → accuracy decreased

・ Increase the epoch number → It took time, but the accuracy increased from 0.2533 to 0.4655

・ In the first place, since it is a different class classification, Dense's activation was changed to softmax instead of sigmoid → For some reason, the accuracy did not change.

Results

I tried various other things, but the limit is 0.4655 in 6 class classifications.

However, when we tried 4 classes with the same epoch number and model, the accuracy increased from 0.6063 to 0.7104.

It is said that it is good if it is 0.8 or more.

Because it is an irregular field of stocks

Isn't it accurate enough for analytical data?

Final changes

・ Prevent overfitting with Dropout

・ Since it is a different classification, change the activation of Dense from sigmoid to softmax.

Increase the number of epochs by 10.

Before

model = Sequential()

model.add(LSTM(100, activation='tanh'))

model.add(Dense(num_classes, activation='sigmoid'))

model.compile(optimizer='adam', loss="categorical_crossentropy", metrics=['accuracy'])

model6.fit(X, y, validation_split=0.2, epochs=10)After

model = Sequential()

model.add(LSTM(units=128, input_shape=(n_steps, n_features)))

model.add(Dropout(0.2))

model.add(Dense(units=num_classes, activation='softmax'))

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

model.fit(X6, y6, batch_size=32, epochs=100, validation_split=0.2)Summary

This time, the accuracy was improved by simply increasing the epoch number.

Even machine learning

Performance and quality that humans modify are an essential part

Accuracy is probably the best measure to evaluate it.

Also, to improve accuracy

Looking for a better algorithm

Inquisitiveness and curiosity are also necessary for engineers.

Call for requests

We are also looking for your requests and questions.